A short description of what DevOps is and how it helps companies compete in their business through faster innovation, followed by a demonstration of how the Cisco multicloud portfolio helps in the adoption of DevOps practices [see also this post for more detail].

If your time for reading is limited, here is the structure of the post: you can jump to the paragraphs you are interested in.

(The business view starts here)

The need for digital innovation.

New services, better quality

Frequent releases

DevOps is not a technology or a product.

Cultural change and collaboration (break silos in the organization)

Small teams responsible for a service’s lifecycle end to end

DevOps principles.

Feedback loop

CI/CD – Continuous Integration and Continuous Deployment

(The technical view starts here)

Cisco Multicloud approach.

Cisco CloudCenter Suite (CCS)

Cisco Container Platform (CCP)

Our lab

Application

Infrastructure

Demo flow

Implementation

Conclusions

DevOps makes it faster and easier

Cultural change is needed (incentives)

Cisco offers an effective toolset to help the adoption of DevOps practices

The need for digital innovation.

Whatever your business is, your customers expect more and more services, greater efficiency and value added by innovation.

Providing new business services (generally supported by software applications) to customers and anticipating your competitors’ moves attracts new customers and retains the existing ones.

Often the lines of business are not satisfied with the support they receive from corporate IT in terms of flexibility and speed when starting a new project, especially if new technologies or skills are required (e.g. cloud native applications).

The perceived quality of IT also depends on how frequently fixes for broken services are released, and on a process that prevents bugs from reaching the production environment by intercepting them with good functional and reliability tests.

Frequent releases and the quality of the code can benefit a lot from automation in all the phases of a software project, though end-to-end automation is not absolutely necessary: it is just much better. The fundamental pillars are a good organization of the work and processes that cover every need (no gaps in responsibility, no grey areas in communication among different departments, shared objectives instead of finger pointing).

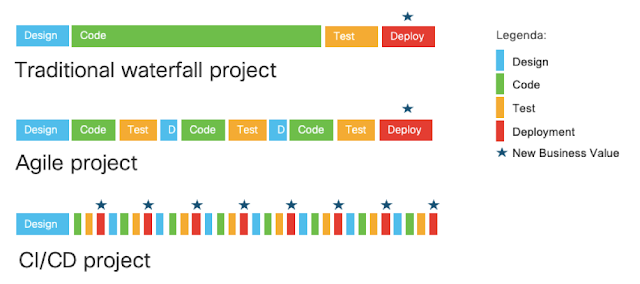

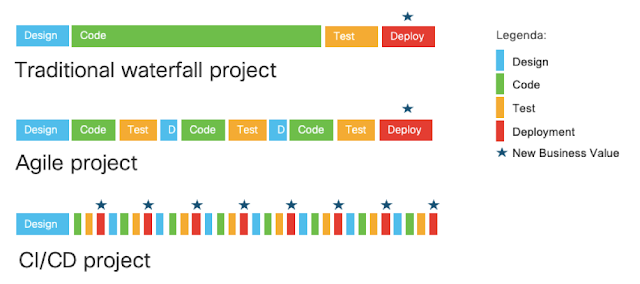

The next picture shows the evolution of methodologies and their impact on the value perceived by the business. The small star represents the instant when business value is realized by a release of the application into production.

With the traditional waterfall projects, it happens only at the end of the project (by the way, with a lot of uncertainty due to delays and unexpected troubles during the development and the test phases).

The agile methodology reduces the risk, repeating shorter cycles of design, coding and testing that can address any surprise and correct the course of the project sooner. But the deployment in production still happens at the very end of the project.

The innovation allowed by Continuous Integration and Continuous Deployment brings the application into production at every cycle (new releases or bug fixes), ensuring optimal quality and a deterministic outcome: the business will appreciate the benefit in terms of time to market for their initiatives.

Picture 1 - CI/CD offers more business value

DevOps is not a technology or a product.

DevOps means collaboration between Developers and Operations.

The work of those responsible for the design and implementation of the code does not finish when a new build of the application is released. Developers should also collaborate in testing the system, releasing it into production, operating it and measuring its KPIs.

The Operations team should not just execute a defined process to maintain the system, but should collaborate from the design phase of the application and, most importantly, provide constructive feedback from the production environment that helps improve and extend the application in the next development cycles.

A cultural change.

A cultural change (breaking silos in the organization) should be promoted, with incentives and a gradual adoption of practices that will improve with time: the entire organization and the individuals have to digest a new way of working, openly analyze the outcome, and contribute to the progress with personal feedback and suggestions.

Everybody should feel that they have a common goal and that they are collaborating toward everyone’s success.

Small teams responsible for a service’s lifecycle end to end.

DevOps practices suggest that the entire lifecycle of a service is managed by a single team: from the inception phase and the requirements analysis, to the implementation, test, release and operations. The team can be more efficient, and provide better quality, if its members know everything about the service and can react to any problem quickly, as well as evolve the service based on new requirements.

The team should include representatives from different departments (lines of business, IT Architecture, Operations…) that bring their skills and experience, so a new organizational model may be required in your company: maybe a dotted line reporting structure with functional responsibilities.

It is not necessary to build a team for each service: some services can be grouped in one team, especially if they belong to the same business area or if they are the building blocks for a composite application (in a microservices architecture).

DevOps principles.

The First Way – Systems Thinking

• Understand the entire flow of work

• Seek to increase the flow of work

• Stop problems early and often – Don’t let them flow downstream

• Keep everyone thinking globally

• Deeply understand your systems

First Way Goals

• One source of truth – Code, environment and configuration in one place

• Consistent release process – Automation is essential (one click)

• Decrease cycle times, Faster release cadence

The Second Way – Feedback Loops

• Understand and respond to the needs of all customers (internal and external)

• Shorten and amplify all feedback loops

• With feedback comes quality

Second Way Goals

• Defects and performance issues fixed faster

• Ops and InfoSec user stories appear as part of the application

• Everyone is communicating better

• More work getting done

The Third Way – Synergy

• Consistent process and effective feedback result in agility

• Now use that agility to experiment

• You only learn from failure – So fail often, but recover quickly

Third Way Goals

• Ability to anticipate, even define new business needs through visibility in the systems

• Ability to test and optimize new business opportunities in the system while managing risk

• Joy

Now that you have an idea of what DevOps is, let’s have a look at a solution from Cisco that could make it easier to adopt DevOps practices. Remember that DevOps cannot be bought: it is the set of good practices that you define and continuously refine based on direct experience. Automation is only a part of the story.

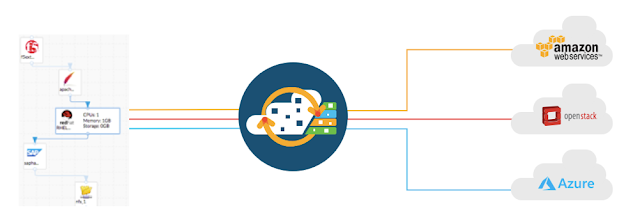

Cisco Multicloud approach.

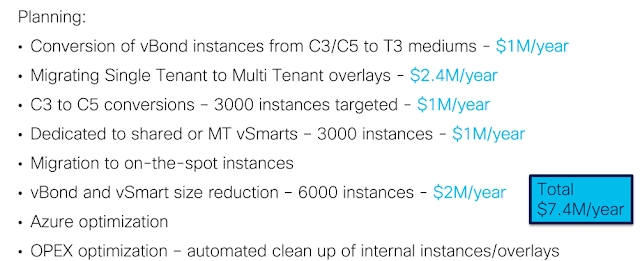

Cisco knows that many customers are using at least one private or public cloud, but most of them use at least two: that implies a need for consistent governance, security, networking, analytics and automation that apply to every environment.

The multicloud portfolio includes products and reference architectures that span all the areas mentioned above and make adoption simpler.

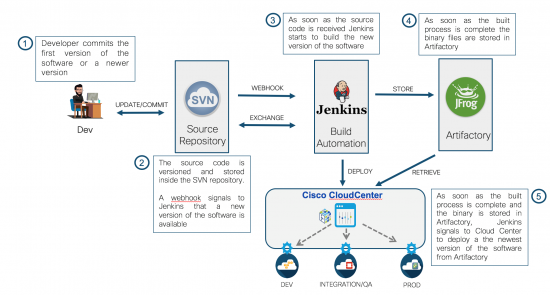

This post explains how we have built a demo using products in the automation bucket to support a DevOps use case (i.e. Continuous Integration and Continuous Deployment, aka CI/CD).

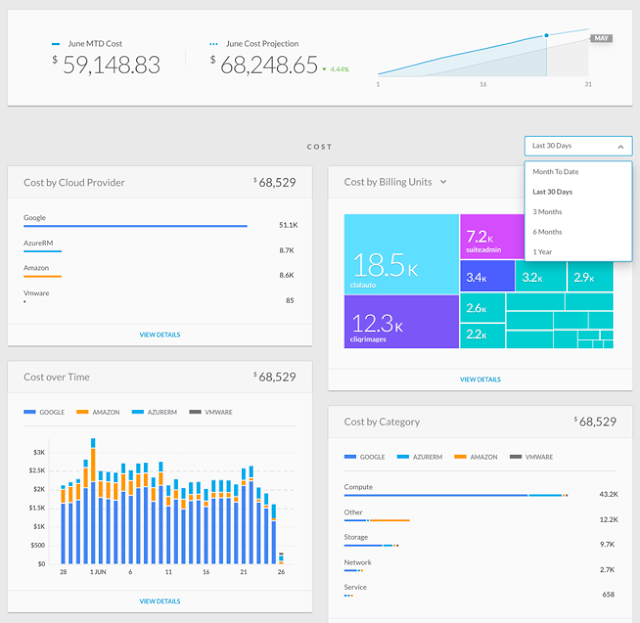

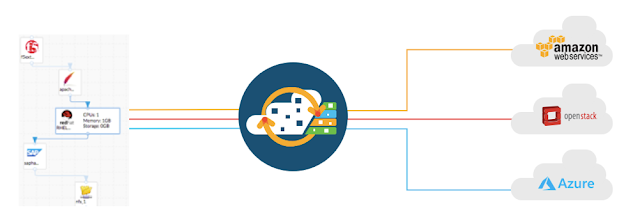

Cisco CloudCenter Suite

The CloudCenter Suite is a solution that helps the IT organization sustain the pressure from developers and lines of business to deploy and operate a large number of applications and middleware platforms, a task made more complex by the many possible targets (private and public clouds running VMs and containers).

Picture 2 - CloudCenter addresses the many-to-many complexity

The CloudCenter Suite is a single tool to automate the deployments and broker resources from any cloud. It helps to enforce a single governance model including cost control, approval processes, security policies and consistent architecture across different clouds.

You don’t have to learn and use separate tools from the cloud providers, nor replicate the automation blueprints using the native automation technologies in each cloud (e.g. CloudFormation, Heat, PowerShell): you only create a single model and CloudCenter translates it into calls to the specific API exposed by each private and public cloud and by Kubernetes clusters.

Picture 3 - CloudCenter translates a single blueprint to API calls for all clouds
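The idea of a single model translated into target-specific calls can be sketched in a few lines of Python. This is only an illustration of the pattern, not CloudCenter's implementation or API: the `Blueprint` class, the `translate` function, the target names and the request dictionaries are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Blueprint:
    """A cloud-agnostic description of what to deploy (hypothetical model)."""
    app_name: str
    image: str
    replicas: int


def to_kubernetes(bp: Blueprint) -> dict:
    # Hypothetical request shape, loosely inspired by a Kubernetes Deployment.
    return {
        "kind": "Deployment",
        "metadata": {"name": bp.app_name},
        "spec": {"replicas": bp.replicas, "image": bp.image},
    }


def to_vm_cloud(bp: Blueprint) -> dict:
    # Hypothetical request shape for a VM-based cloud: one instance per replica.
    return {
        "action": "create_instances",
        "count": bp.replicas,
        "name_prefix": bp.app_name,
        "startup_image": bp.image,
    }


# One translator per target type: supporting a new target means adding an
# entry here, while the blueprint itself stays unchanged.
TRANSLATORS = {
    "kubernetes": to_kubernetes,
    "vm_cloud": to_vm_cloud,
}


def translate(bp: Blueprint, target: str) -> dict:
    """Turn the single blueprint into a request for the chosen target."""
    try:
        return TRANSLATORS[target](bp)
    except KeyError:
        raise ValueError(f"unsupported target: {target}") from None


if __name__ == "__main__":
    blueprint = Blueprint("thewall", "registry.example/thewall:1.0", replicas=3)
    for target in TRANSLATORS:
        print(target, translate(blueprint, target))
```

The point of the design is that whoever writes the blueprint never sees the target-specific request: the translation table is the only place that knows about each cloud.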

Everything you do in CloudCenter can also be done through its API, which makes it easy to orchestrate it externally (e.g. from Jenkins, through a plugin that Cisco ships so that you can insert multicloud deployments in your CI/CD pipeline).

The current version of the CloudCenter Suite also includes additional modules like the Cost Optimizer and the Action Orchestrator: a useful enhancement to create a governance model and make operations easy in a heterogeneous multi-cloud environment.

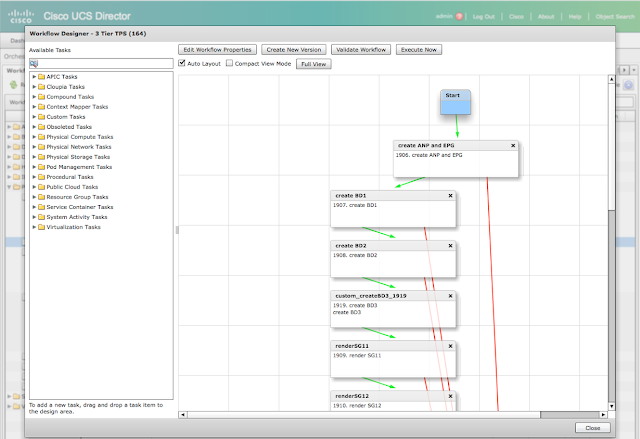

Cisco Container Platform

Another software product from Cisco, which you can see as a tool for Operations to create and manage enterprise-grade Kubernetes clusters.

It creates, fully configures and manages (upgrades, scales, monitors) Kubernetes clusters on-premises and in the public cloud for you.

It takes care of all the complexity of the integration with networking (options offered out of the box are Calico, Contiv and Cisco ACI), storage, security (SSO and RBAC are added to Kubernetes) as well as centralized monitoring and logging while shipping 100% open source binaries from the upstream repositories.

Our lab

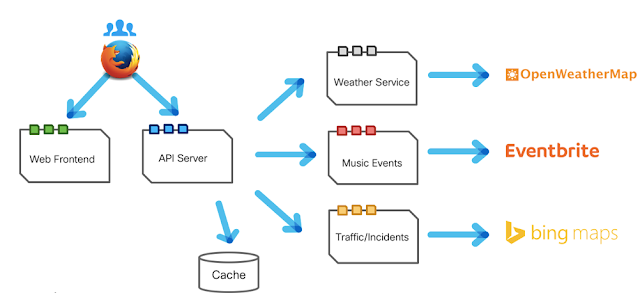

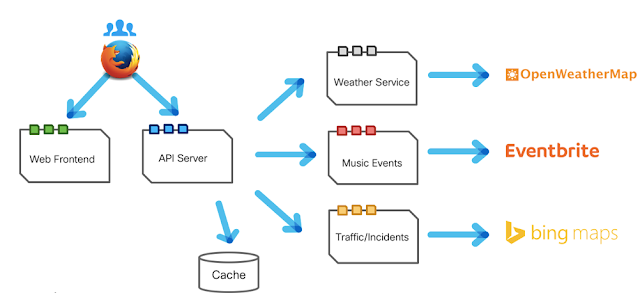

We have built a simple application based on a microservices architecture, as shown by the picture.

Picture 4 - the microservices application built for the demo

The source code of the 5 components is stored in a GitHub repository, where new versions of the application are committed (saved) by developers. At each commit, the Jenkins orchestrator gets the source code and compiles it, building the container images that are needed to deploy the application.

The images are saved in a shared container registry (Harbor, see next picture) where Cisco CloudCenter will be able to retrieve them when asked by Jenkins to deploy the application. Based on input parameters provided by Jenkins, CloudCenter will target the deployment to the most appropriate environment for the current phase of the project.

In our demo lab, the environments are “integration test”, “performance test” and “production”.

They correspond to three different Kubernetes clusters that have been created in the private cloud (the first two) and in the public cloud (for the production environment).

Each environment has different policies set, that will be inherited by every application that is deployed there: for security, networking, autoscaling, etc.
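To make the idea of policy inheritance concrete, here is a minimal sketch in Python. It is not how CloudCenter stores or applies its policies: the `ENVIRONMENTS` table, the policy names and values, and the `plan_deployment` function are assumptions made up for this illustration. Only the three environment names and their private/public placement come from our lab.

```python
# Hypothetical description of the three environments used in the demo.
# Policy names and values are illustrative assumptions, not CloudCenter settings.
ENVIRONMENTS = {
    "integration test": {
        "cloud": "private",
        "policies": {"autoscaling": False, "network": "isolated"},
    },
    "performance test": {
        "cloud": "private",
        "policies": {"autoscaling": True, "network": "isolated"},
    },
    "production": {
        "cloud": "public",
        "policies": {"autoscaling": True, "network": "restricted"},
    },
}


def plan_deployment(app_settings: dict, phase: str) -> dict:
    """Combine application settings with the policies of the target environment."""
    if phase not in ENVIRONMENTS:
        raise ValueError(f"unknown phase: {phase}")
    env = ENVIRONMENTS[phase]
    # Environment policies are merged last, so they override application
    # settings (an assumption of this sketch: governance belongs to the
    # environment, not to the single application).
    return {**app_settings, "cloud": env["cloud"], **env["policies"]}


if __name__ == "__main__":
    app = {"name": "thewall", "autoscaling": False}
    for phase in ENVIRONMENTS:
        print(phase, plan_deployment(app, phase))
```

The orchestrator only has to pass the phase of the project as an input parameter; everything else follows from the environment that the phase maps to.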

The 3 Kubernetes clusters mentioned above have been generated by the Cisco Container Platform (though we could have created them manually in each cloud). The value of using CCP lies in consistent operations, speed and ease: in a few minutes we created 3 production-ready clusters, fully integrated with networking, storage, security, monitoring and logging, without even touching the K8s installer or the underlying infrastructure.

The 2 clusters named “integration test” and “performance test” were created automatically inside VMs in a local VMware environment, while the cluster named “production” was created in the Amazon cloud (CCP uses the API exposed by the Amazon EKS service to do everything automatically, including the integration with AWS IAM for security).

The automated deployments will repeat, in the three environments, in a sequence that alternates them with the necessary tests and ensures the quality of the release. Though in the real world you might want to run more complex testing activities, this is a meaningful example of the efficiency you can achieve thanks to full automation of the process.

The pipeline can still be extended with additional tests, like code quality inspection and more.

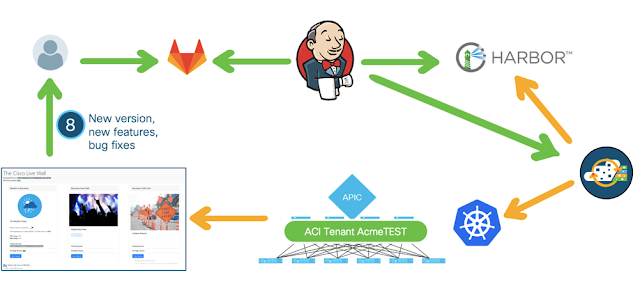

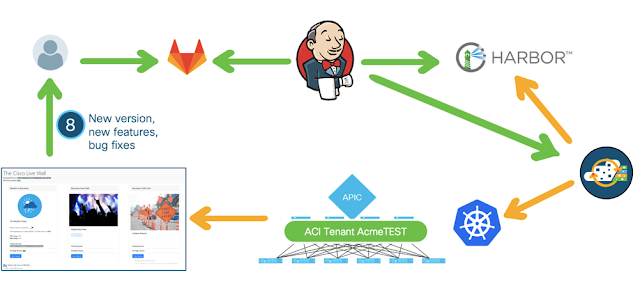

Picture 5 - the CI/CD cycle

Demo flow

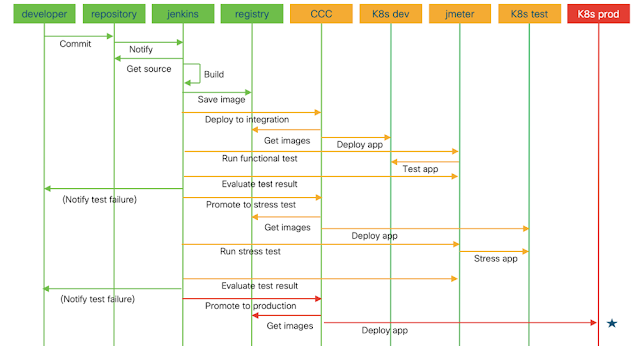

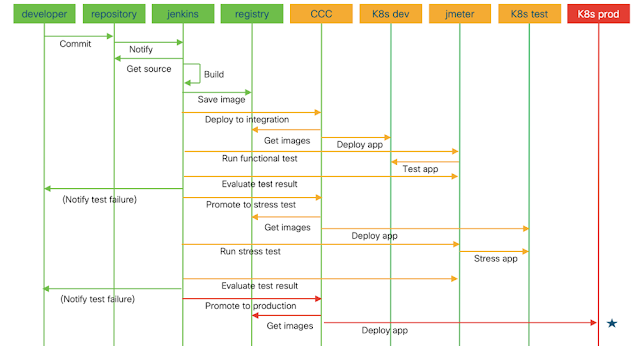

The next picture is a sequence diagram showing all the actions that we have automated.

We used a color code to represent the phases that are commonly referred to as Continuous Integration (the green part) and Continuous Deployment (the orange part).

CCC stands for Cisco CloudCenter, while K8s dev, test and prod represent the 3 Kubernetes clusters mentioned above.

The entire process is completely automated and brings a new version of the application to the production deployment without any human intervention.

This complete automation is often referred to as Continuous Deployment. Though it is very useful and has been adopted by big players like Facebook (whose pipeline is more complex than our simplified demo), it is not very common among the customers I generally meet.

Those that adopted DevOps still prefer to have some human checks in between the activities, so that they feel they have better control over the process and its quality.

When they have more experience, they will probably be confident enough to delegate every check to the automation tools.

Picture 6 - a sequence diagram showing the automated actions

Implementation

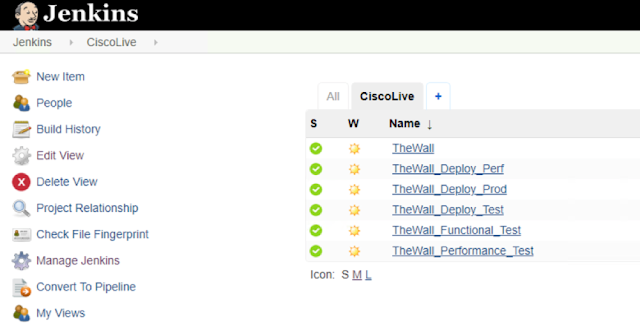

The automation is based on Jenkins, an open source orchestrator that benefits from the availability of hundreds of plugins: it can automate almost every component in your IT ecosystem, including Cisco CloudCenter of course.

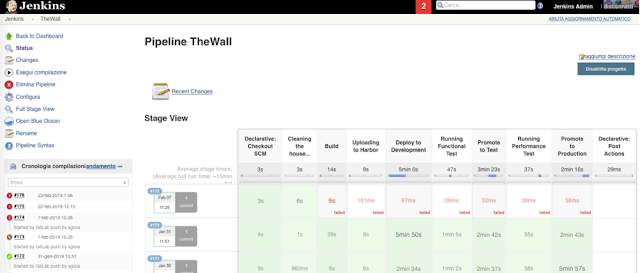

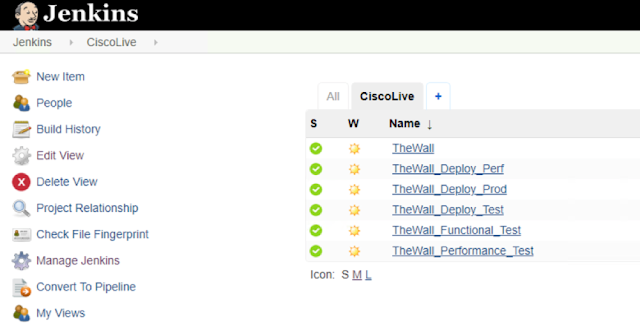

In the Jenkins dashboard you can build different projects, like in the picture below. A project is a sequence of steps, using plugins to drive activities in the systems you want to automate (e.g. pull the source code from the repository, compile it, build container images, trigger a cloud deployment through CloudCenter, etc.).

Picture 7 - Jenkins projects

Projects can call other projects, to make your orchestration modular and reusable. In the picture above, the project TheWall (that is the name of our demo application) calls the other 5 projects in a sequence, checking that the outcome is positive before calling the next project.
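The logic of a parent project that calls its sub-projects in a sequence and stops at the first negative outcome can be sketched as follows. This is plain Python, not Jenkins configuration, and the stage names in the example are hypothetical placeholders rather than the real names of our sub-projects.

```python
from typing import Callable, List, Tuple

# A stage is a name plus an action that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run the stages in order and stop at the first one that fails.

    Returns the overall outcome and the names of the stages that were started.
    """
    executed = []
    for name, action in stages:
        executed.append(name)
        if not action():
            return False, executed  # later stages are never called
    return True, executed


if __name__ == "__main__":
    # Hypothetical stage names; the lambdas stand in for real work.
    stages = [
        ("build", lambda: True),
        ("deploy_to_test", lambda: True),
        ("functional_test", lambda: False),  # simulate a failing test
        ("deploy_to_production", lambda: True),
    ]
    ok, executed = run_pipeline(stages)
    print("success" if ok else "aborted", executed)
```

Because each stage is just a callable, a stage can itself be another call to `run_pipeline`, which mirrors the way projects call other projects.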

So, we are able to automate the deployments in the 3 Kubernetes clusters and to run the functional test and the performance test of the application using an external tool (we used another open source product called Apache JMeter).

The functional test is a sequence of user transactions, executed by the test tool using a pool of user identities and a pool of input data, where assertions about the expected result are validated automatically. If the page generated by the application differs from the expected result, an error is logged, and the test can be considered failed. So, the functional test ensures that the application behaves as expected from a functional standpoint (and you can avoid a manual test for user acceptance).
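The principle of the functional test can be shown with a small Python sketch. It does not reproduce how JMeter works or how it is configured: `fake_app` is a made-up stand-in for the application, and the pools of users and input data are invented for the example.

```python
from itertools import product


def fake_app(user: str, message: str) -> str:
    """Stand-in for the application under test (hypothetical behaviour)."""
    return f"<p>{user} wrote: {message}</p>"


def expected_page(user: str, message: str) -> str:
    """The result we expect for a given user and input."""
    return f"<p>{user} wrote: {message}</p>"


def run_functional_test(app, users, inputs, expected) -> list:
    """Run one transaction per (user, input) pair and collect the failures."""
    failures = []
    for user, data in product(users, inputs):
        page = app(user, data)
        wanted = expected(user, data)
        if page != wanted:  # the assertion on the expected result
            failures.append(
                {"user": user, "input": data, "got": page, "expected": wanted}
            )
    return failures


if __name__ == "__main__":
    users = ["alice", "bob"]        # pool of user identities
    inputs = ["hello", "goodbye"]   # pool of input data
    failures = run_functional_test(fake_app, users, inputs, expected_page)
    print("passed" if not failures else f"failed: {failures}")
```

An empty list of failures means the test passed; any entry in the list is the logged error that makes the test fail.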

The performance test, executed by the same tool, stresses the application and the infrastructure from a performance standpoint. A large number of concurrent users are simulated by the tool, invoking a sequence of user transactions with random wait times, reproducing a situation similar to the workload in a production environment. Response times are tracked and so are any errors, allowing the tool to declare whether the test is successful or not.
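A minimal sketch of the same idea in Python: several simulated users run concurrently, each one waits a random time between transactions, and the verdict depends on response times and errors. This is not how JMeter is implemented, and the thresholds (`max_avg_seconds`, `max_error_rate`) and all the function names are assumptions chosen for the example.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def simulate_user(transaction, n_transactions, max_wait, rng):
    """Run a sequence of transactions with random waits; return (times, errors)."""
    times, errors = [], 0
    for _ in range(n_transactions):
        time.sleep(rng.uniform(0, max_wait))  # random wait between transactions
        start = time.perf_counter()
        try:
            transaction()
        except Exception:
            errors += 1
        else:
            times.append(time.perf_counter() - start)
    return times, errors


def run_load_test(transaction, users=20, n_transactions=5, max_wait=0.01,
                  max_avg_seconds=0.5, max_error_rate=0.01, seed=0):
    """Simulate concurrent users and decide whether the test passed."""
    # One random generator per simulated user, so threads do not share state.
    rngs = [random.Random(seed + i) for i in range(users)]
    with ThreadPoolExecutor(max_workers=users) as pool:
        results = list(pool.map(
            lambda rng: simulate_user(transaction, n_transactions, max_wait, rng),
            rngs,
        ))
    times = [t for user_times, _ in results for t in user_times]
    errors = sum(user_errors for _, user_errors in results)
    total = users * n_transactions
    avg = sum(times) / len(times) if times else float("inf")
    passed = avg <= max_avg_seconds and errors / total <= max_error_rate
    return {"passed": passed, "avg_seconds": avg, "errors": errors, "total": total}


if __name__ == "__main__":
    # A stand-in transaction that just takes a little time.
    report = run_load_test(lambda: time.sleep(0.002))
    print(report)
```

In a real test the transaction would be a request to the deployed application, and the thresholds would come from the service levels you expect in production.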

Based on the outcome produced by JMeter, the Jenkins orchestrator will continue with the Continuous Deployment pipeline or abort it, notifying the developers that something went wrong and a correction is required.

In this case, the CI/CD cycle will restart from the beginning: source code modified and committed, application built and deployed to the first environment, test executed, application promoted to the next environment and tested… until the pipeline is completely executed without any warning or error and the application is released automatically into production.

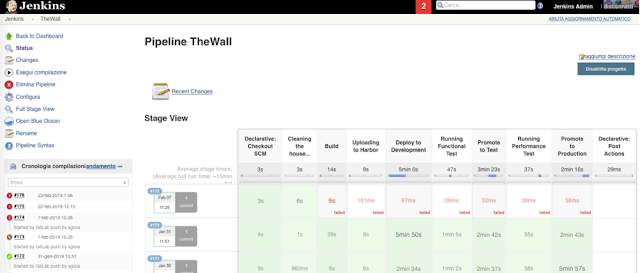

The next picture shows the execution of the Jenkins pipeline for three different builds of the application. The most recent execution failed because the modification of the source code introduced an error that blocked the build. The other two executions succeeded, as demonstrated by the green color of every step in the pipeline.

Picture 8 - Jenkins pipeline

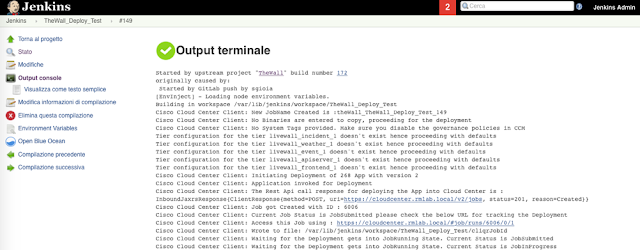

Jenkins logs all the activities, so that you can check what happened during the automated process.

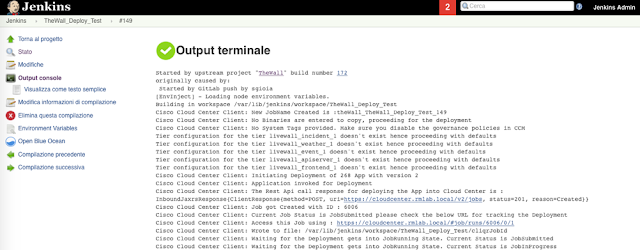

The next picture shows the output of the sub-project named TheWall_Deploy_Test, which is the 7th stage of the pipeline in the previous picture.

It uses the API exposed by CloudCenter to deploy the application “TheWall” to a test environment running Kubernetes that is robust enough to sustain the workload of the performance test (while the functional test can also be executed in a smaller cluster with less computing power).

Picture 9 - output from the Jenkins CI/CD pipeline

You don’t have to code the API calls, because CloudCenter ships a plugin for Jenkins that integrates into its user interface graphically. But if you prefer, Jenkins can run scripts and commands from the CLI for you.

Conclusions

DevOps makes it faster and easier

If you adopt a DevOps methodology you take agility to the extreme and get a business outcome from the fast release of applications.

Cultural change is needed (use incentives)

DevOps is not a matter of technology. Your people need to work in a different way: no finger pointing between Developers and Operations, no “it’s not my job”, everybody should commit towards a common goal and enjoy their common achievement.

Initially it will be difficult: you have to teach them little by little. Offer incentives to people who show a collaborative attitude and a spirit of innovation, let them feel like the heroes of the new adventure and guarantee that failures will not create any trouble. You learn from your mistakes and there is no magic wand to start directly with a perfect solution.

In addition, traditional methodologies generate project failures too: the difference is that DevOps anticipates problems and you discover them sooner, so the business impact is much smaller.

Cisco offers an effective toolset to help the adoption of DevOps practices

We all agree that DevOps is not a product. But once you start working you will see that automation helps the CI/CD process run smoothly. You can find great open source (and free) tools (e.g. Jenkins, JMeter, Ansible) to support your project teams, but if you also adopt Cisco CloudCenter and the Cisco Container Platform your professional life will be much easier.

Credits

The demo lab described in the post has been built with two colleagues and friends, whom I want to thank here: Stefano Gioia and Riccardo Tortorici.

References